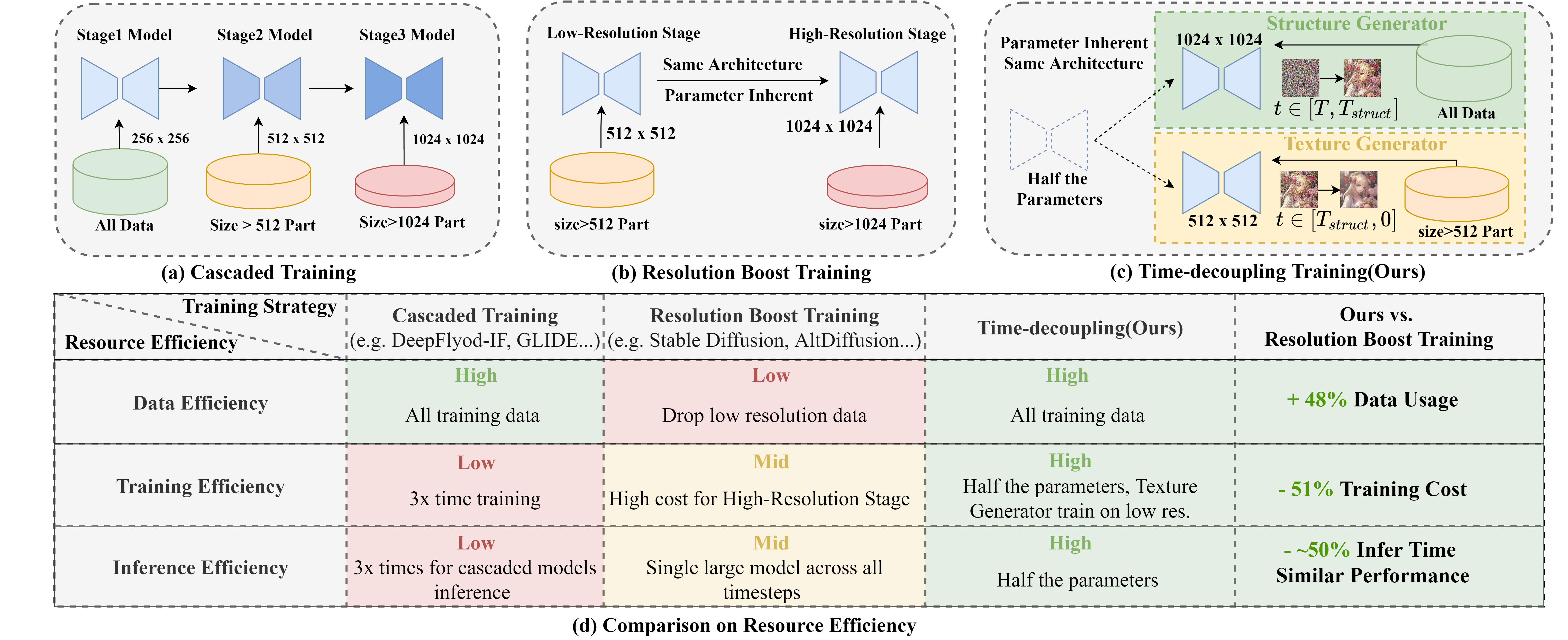

Current large-scale diffusion models represent a giant leap forward in conditional image synthesis, capable of interpreting diverse cues such as text, human poses, and edges. However, their reliance on substantial computational resources and extensive data collection remains a bottleneck. Moreover, existing diffusion models are each specialized for different controls and operate in their own latent spaces, so incompatible image resolutions and latent-space embedding structures make it difficult to use them jointly. To address these constraints, we present PanGu-Draw, a novel latent diffusion model for resource-efficient text-to-image synthesis that adeptly accommodates multiple control signals. First, we propose a resource-efficient Time-Decoupling Training Strategy, which splits the monolithic text-to-image model into structure and texture generators. Each generator is trained with a regimen that maximizes data utilization and computational efficiency, cutting data preparation by 48% and training resources by 51%. Second, we introduce Coop-Diffusion, an algorithm that enables the cooperative use of various pre-trained diffusion models with different latent spaces and predefined resolutions within a unified denoising process. This allows multi-control image synthesis at arbitrary resolutions without additional data or retraining. Empirical validations show that PanGu-Draw performs strongly in both text-to-image and multi-control image generation, suggesting a promising direction for more efficient model training and more versatile generation. The largest PanGu-Draw model, a 5B-parameter text-to-image model, is released on the Ascend platform.

We introduce the Time-Decoupling Training Strategy, which divides a comprehensive text-to-image model into two specialized sub-models: a structure generator and a texture generator. The structure generator handles the early denoising stage at larger timesteps and focuses on establishing the foundational outlines of the image. The texture generator operates at the later, smaller timesteps and elaborates the textural details. Each generator is half the size of the original model and is trained independently, which removes the need for high-memory computation devices and avoids the complexity of model sharding and its accompanying inter-machine communication overhead. Furthermore, the structure generator is trained on high-resolution images together with upscaled lower-resolution ones, which improves data efficiency and avoids the problem of semantic degeneration, while the texture generator is trained at a lower resolution but still samples at high resolution. Together, these choices yield an overall 51% improvement in training efficiency.
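At sampling time, the two generators can be viewed as a single denoiser that dispatches each step by timestep. The sketch below illustrates that routing and the disjoint timestep ranges each generator would be trained on. It is a minimal illustration under stated assumptions, not the authors' implementation: the boundary `split_t`, the 1000-step schedule in the usage example, and all function names are hypothetical.

```python
from typing import Callable, List

# A denoiser maps (latent, timestep, prompt) -> predicted noise.
Denoiser = Callable[[List[float], int, str], List[float]]


def make_time_decoupled_denoiser(
    structure_gen: Denoiser, texture_gen: Denoiser, split_t: int
) -> Denoiser:
    """Route each sampling step to one of two independently trained sub-models.

    Large (noisy) timesteps t >= split_t go to the structure generator;
    small timesteps t < split_t go to the texture generator.
    NOTE: split_t is a hypothetical hyperparameter, not a value from the paper.
    """

    def denoise(z_t: List[float], t: int, prompt: str) -> List[float]:
        gen = structure_gen if t >= split_t else texture_gen
        return gen(z_t, t, prompt)

    return denoise


def training_timesteps(num_steps: int, split_t: int, role: str) -> range:
    """Timesteps each generator sees during its own, separate training run."""
    if role == "structure":
        return range(split_t, num_steps)
    if role == "texture":
        return range(0, split_t)
    raise ValueError(f"unknown role: {role!r}")


# Toy stand-ins that only record which sub-model handled each step.
calls = []


def structure(z, t, p):
    calls.append(("structure", t))
    return z


def texture(z, t, p):
    calls.append(("texture", t))
    return z


denoise = make_time_decoupled_denoiser(structure, texture, split_t=500)
for t in (999, 500, 499, 0):
    denoise([0.0], t, "a cat")
print(calls)
# [('structure', 999), ('structure', 500), ('texture', 499), ('texture', 0)]
```

Because the two timestep ranges are disjoint, each generator can be trained as a separate job with its own data and resolution settings, which is what makes the half-size, shard-free training described above possible.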

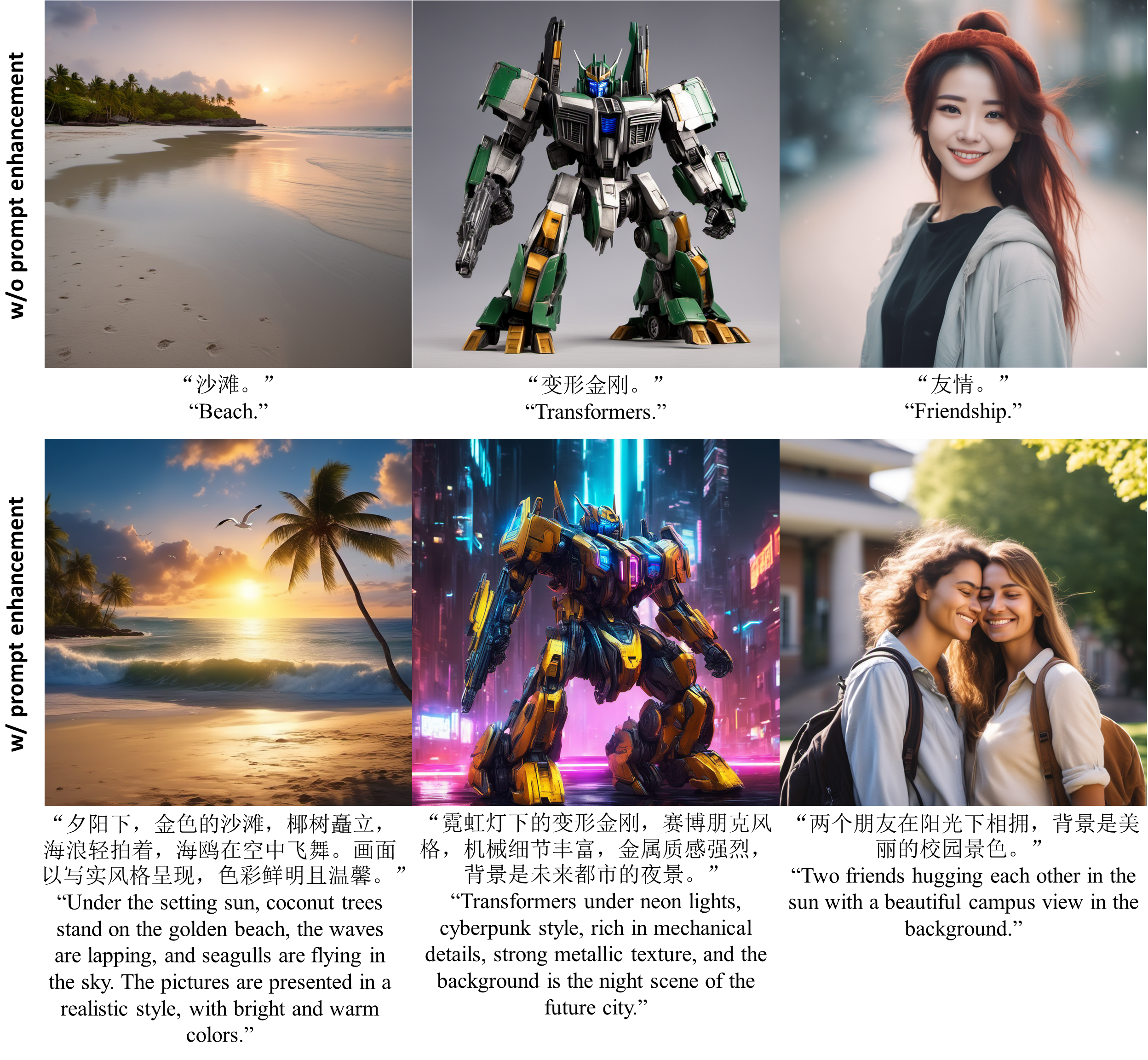

To further enhance generation quality, we harness the advanced comprehension abilities of large language models (LLMs) to align users' succinct inputs with the detailed inputs required by the model. First, we fine-tune the LLM on a human-annotated dataset so that it transforms a succinct prompt into an enriched version. Then, to optimize these rewrites for PanGu-Draw, we apply Reward rAnked FineTuning (RAFT), which selects the prompt pairs yielding the highest reward for further fine-tuning.
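The selection step of RAFT can be sketched as a best-of-k filter over sampled rewrites. This is one simple variant written for illustration: `sample_rewrites` stands in for the fine-tuned LLM and `reward` for whatever scoring PanGu-Draw's outputs receive; both are hypothetical, since the text does not specify the reward model or the number of candidates.

```python
from typing import Callable, List, Tuple


def raft_select(
    short_prompts: List[str],
    sample_rewrites: Callable[[str, int], List[str]],
    reward: Callable[[str, str], float],
    k: int,
) -> List[Tuple[str, str]]:
    """One round of reward-ranked selection, in the spirit of RAFT.

    For each succinct prompt, sample k enriched rewrites, score each one,
    and keep only the highest-reward (succinct, enriched) pair. The kept
    pairs become the fine-tuning set for the next LLM update.
    """
    selected: List[Tuple[str, str]] = []
    for prompt in short_prompts:
        candidates = sample_rewrites(prompt, k)
        best = max(candidates, key=lambda c: reward(prompt, c))
        selected.append((prompt, best))
    return selected


# Toy stand-ins (hypothetical): a "rewriter" that appends detail and a
# "reward" that simply prefers longer prompts.
def toy_rewrites(prompt: str, k: int) -> List[str]:
    return [prompt + ", detailed" * i for i in range(k)]


def toy_reward(prompt: str, rewrite: str) -> float:
    return float(len(rewrite))


print(raft_select(["a cat"], toy_rewrites, toy_reward, k=3))
# [('a cat', 'a cat, detailed, detailed')]
```

In practice the reward would come from scoring images generated from each enriched prompt, and the selection and fine-tuning steps would alternate over several rounds.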

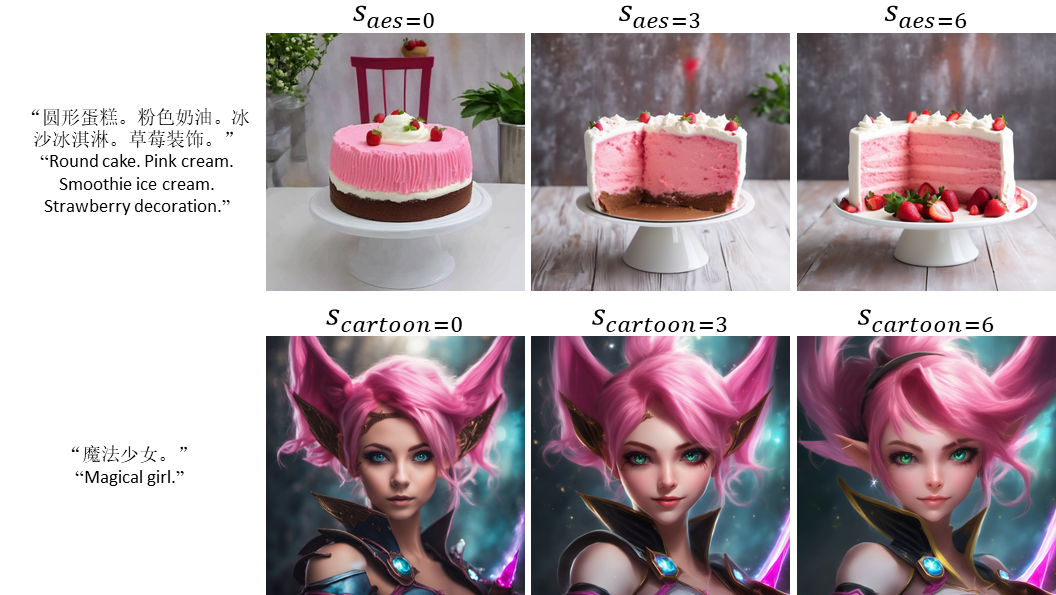

While techniques like LoRA allow one to adapt a text-to-image model to a specific style (e.g., a human-aesthetic-preferred style or a cartoon style), they do not allow one to adjust the strength of that style. Inspired by the classifier-free guidance mechanism, we perform controllable stylized text-to-image generation as follows. During training, we prepend a special prefix to the original prompts of human-aesthetic-preferred and cartoon samples; the resulting prefixed prompts are denoted \(c_{aes}\) and \(c_{cartoon}\), respectively. During sampling, we extrapolate the prediction toward \(\epsilon_\theta(z_t, t, c_{style})\) and away from \(\epsilon_\theta(z_t, t, c)\):

\(\hat{\epsilon}_{\theta}(z_t, t, c) = \epsilon_{\theta}(z_t, t, \emptyset) + s \cdot ({\epsilon}_{\theta}(z_t, t, c) - \epsilon_{\theta}(z_t, t, \emptyset)) + s_{style} \cdot ({\epsilon}_{\theta}(z_t, t, c_{style}) - \epsilon_{\theta}(z_t, t, c)) \),

where \(s\) is the classifier-free guidance scale, \(c_{style} \in \{c_{aes}, c_{cartoon}\}\) and \(s_{style}\) is the style guidance scale.
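The guidance rule above is an element-wise combination of three noise predictions. The sketch below applies it to plain Python lists and also shows how a style prompt might be formed by prepending a prefix. The prefix strings `<aes>` and `<cartoon>` and the function names are hypothetical placeholders; the actual prefixes are not given here.

```python
from typing import List, Sequence

# Hypothetical prefix strings; the actual prefixes are not given in the text.
STYLE_PREFIXES = {"aes": "<aes>", "cartoon": "<cartoon>"}


def style_prompt(prompt: str, style: str) -> str:
    """Build c_style by prepending a style prefix to the original prompt."""
    return f"{STYLE_PREFIXES[style]} {prompt}"


def style_guided_eps(
    eps_uncond: Sequence[float],  # eps_theta(z_t, t, empty prompt)
    eps_cond: Sequence[float],    # eps_theta(z_t, t, c)
    eps_style: Sequence[float],   # eps_theta(z_t, t, c_style)
    s: float,                     # classifier-free guidance scale
    s_style: float,               # style guidance scale
) -> List[float]:
    """Classifier-free guidance plus a style term, element-wise."""
    return [
        u + s * (c - u) + s_style * (st - c)
        for u, c, st in zip(eps_uncond, eps_cond, eps_style)
    ]


print(style_prompt("a red fox in snow", "cartoon"))
# <cartoon> a red fox in snow
print(style_guided_eps([0.0, 1.0], [1.0, 1.0], [2.0, 0.0], s=2.0, s_style=0.5))
# [2.5, 0.5]
```

Setting `s_style = 0` recovers standard classifier-free guidance, and larger values push the sample further toward the chosen style. Each denoising step needs three forward passes, conditioned on \(\emptyset\), \(c\), and \(c_{style}\).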

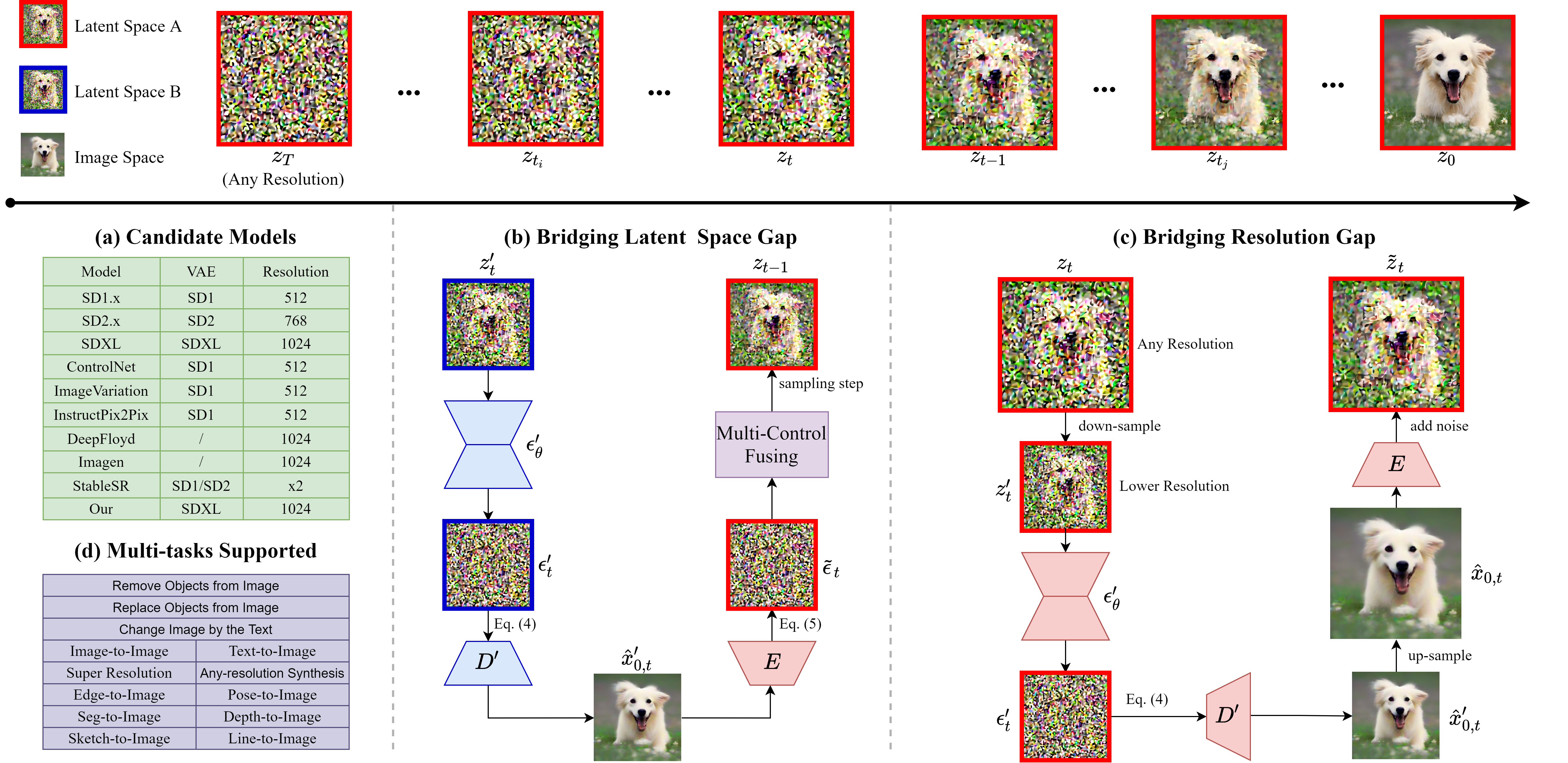

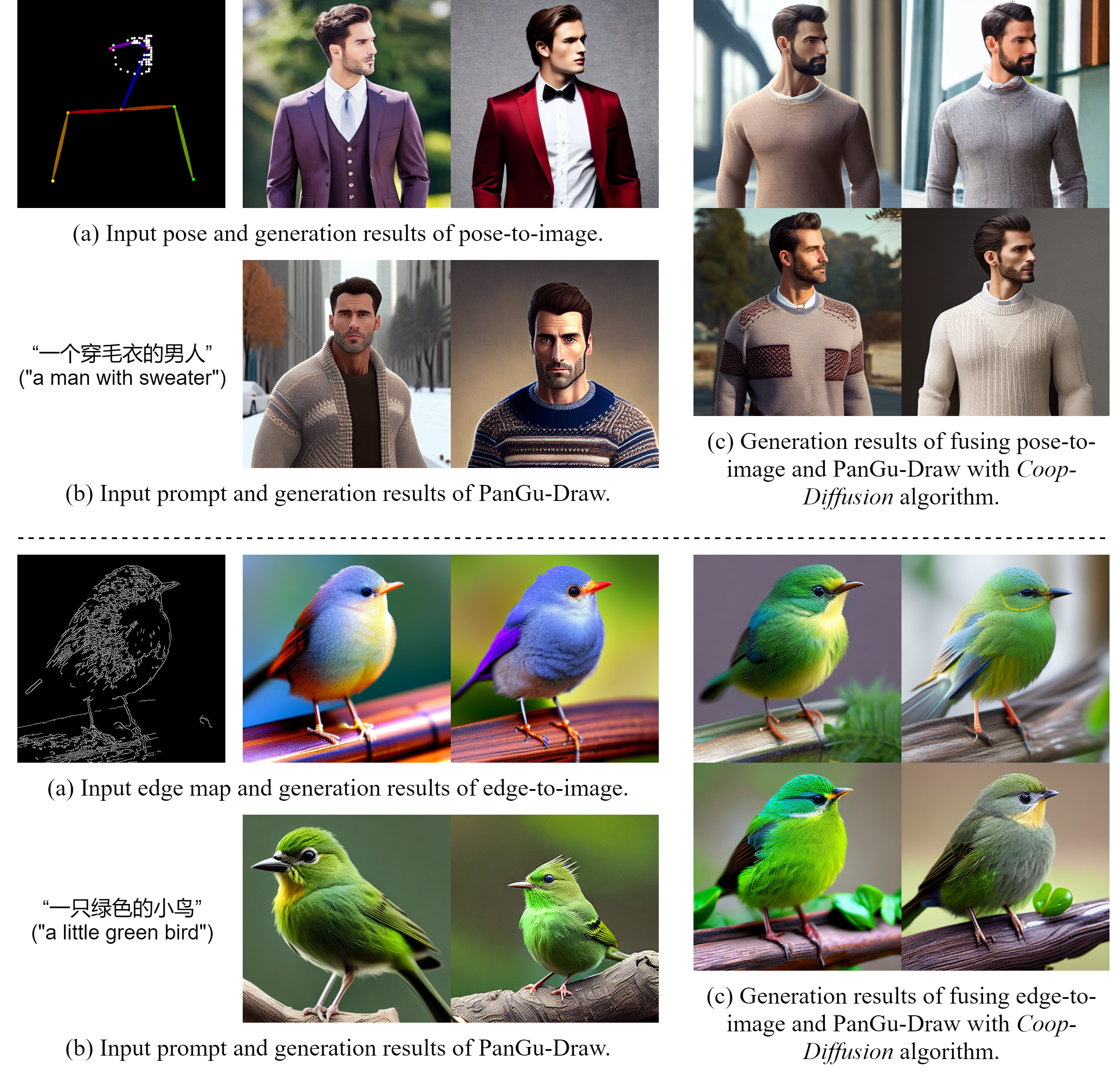

We propose the Coop-Diffusion algorithm to fuse diverse pre-trained diffusion models for multi-control or multi-resolution image generation without training a new model. (a) Existing pre-trained diffusion models, each tailored for specific controls and operating within distinct latent spaces and image resolutions. (b) This sub-module bridges the gap arising from different latent spaces by transforming the model prediction \(\epsilon_t'\) in latent space B to the target latent space A as \(\tilde\epsilon_t\) using the image space as an intermediate. (c) This sub-module bridges the gap arising from different resolutions by performing upsampling on the predicted clean data \(\hat{x}_{0,t}'\).
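Sub-modules (b) and (c) can be sketched with the usual conversion between a noise prediction and a clean-data estimate. This is a minimal illustration under several assumptions that are ours, not the paper's: both models use the standard DDPM \(\epsilon\)-parameterization with a shared cumulative noise level `alpha_bar`, the decoder/encoder are opaque callables, and the upsampling is applied in image space after decoding. All function names are hypothetical, and vectors are flattened to 1-D lists for brevity.

```python
import math
from typing import Callable, List

Vec = List[float]


def predict_x0(z_t: Vec, eps: Vec, alpha_bar: float) -> Vec:
    """Clean-data estimate implied by a noise prediction (DDPM parameterization)."""
    a, b = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [(z - b * e) / a for z, e in zip(z_t, eps)]


def eps_from_x0(z_t: Vec, x0: Vec, alpha_bar: float) -> Vec:
    """Noise implied by z_t and a clean-data estimate (inverse of predict_x0)."""
    a, b = math.sqrt(alpha_bar), math.sqrt(1.0 - alpha_bar)
    return [(z - a * x) / b for z, x in zip(z_t, x0)]


def upsample_nearest(x: Vec, factor: int) -> Vec:
    """Toy 1-D nearest-neighbour upsampling, standing in for image resizing."""
    return [v for v in x for _ in range(factor)]


def bridge_eps(
    z_t_a: Vec,                        # current noisy latent in target space A
    z_t_b: Vec,                        # model B's noisy latent in space B
    eps_b: Vec,                        # model B's prediction eps'_t in space B
    alpha_bar: float,                  # assumed shared noise level at step t
    decode_b: Callable[[Vec], Vec],    # latent B -> image (hypothetical)
    encode_a: Callable[[Vec], Vec],    # image -> latent A (hypothetical)
    upsample: Callable[[Vec], Vec] = lambda img: img,
) -> Vec:
    """Map model B's prediction into latent space A via image space."""
    x0_b = predict_x0(z_t_b, eps_b, alpha_bar)    # predicted clean data in space B
    image = upsample(decode_b(x0_b))              # resolution bridge (image space, assumed)
    x0_a = encode_a(image)                        # clean estimate in space A
    return eps_from_x0(z_t_a, x0_a, alpha_bar)    # tilde-eps_t in space A


# Toy check: low-res model B (2 values) feeding high-res model A (4 values),
# with identity "VAEs" so only the resolution bridge is exercised.
z_b, eps_b = [0.3, -0.1], [0.5, 0.2]
z_a = upsample_nearest(z_b, 2)
eps_a = bridge_eps(z_a, z_b, eps_b, 0.25, decode_b=lambda x: x,
                   encode_a=lambda x: x,
                   upsample=lambda img: upsample_nearest(img, 2))
print([round(e, 6) for e in eps_a])
# [0.5, 0.5, 0.2, 0.2]
```

Converting through the clean-data estimate is what lets predictions from models with different latent spaces or resolutions be expressed in the target model's space, so they can then be combined within a single denoising loop.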

Comparison of PanGu-Draw with English text-to-image generation models on the COCO dataset in terms of FID. Our 5B PanGu-Draw model achieves the best FID among released models.

Comparison of PanGu-Draw with Chinese text-to-image generation models on the COCO-CN dataset in terms of FID, IS, and CN-CLIP score.

@article{lu2023pangudraw,
  title={PanGu-Draw: Advancing Resource-Efficient Text-to-Image Synthesis with Time-Decoupled Training and Reusable Coop-Diffusion},
  author={Guansong Lu and Yuanfan Guo and Jianhua Han and Minzhe Niu and Yihan Zeng and Songcen Xu and Zeyi Huang and Zhao Zhong and Wei Zhang and Hang Xu},
  journal={arXiv preprint arXiv:2312.16486},
  year={2023}
}